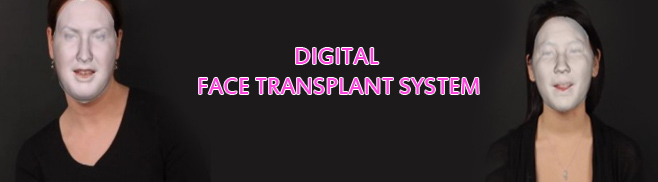

Big-budget special effects could soon be within the grasp of low-budget film-makers thanks to a new technique for automatically replacing one actor's face with another's.

Face replacement is a much-used effect in Hollywood productions, even cropping up in realistic drama films such as The Social Network, in which two unrelated actors played a pair of twins. But the complex and expensive equipment it requires, along with dedicated visual effects artists, has kept it out of low-budget movies.

No longer. "We achieve high-quality results with just a single camera and simple lighting set-up," says Kevin Dale, a computer scientist at Harvard University who came up with the new technique.

Dale and colleagues start a face transplant with an algorithm that creates 3D models of each face. Their system then automatically morphs the image of the donor's face to match the recipient's, but that alone does not create a realistic-looking video: a joining seam is visible. "One frame might look good, or many frames in sequence might look good individually, but when you play them together you get flickering," explains Dale.

So his system calculates the position on both actors' faces where the seam will be as unobtrusive as possible. It also ensures that the seam does not jump around from frame to frame, which avoids flickering. The whole process takes about 20 minutes to produce a 10-second video on an ordinary desktop computer and requires only a little manual interaction. Users can place facial markers on the first frame to generate the 3D facial model, but "in some cases the system worked right out of the box", says Dale.
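The trade-off described above, between hiding the seam in each frame and keeping it steady over time, can be illustrated with a small dynamic-programming sketch. This is not Dale's published method, only a simplified stand-in: it assumes each frame offers a handful of candidate seam positions, that `cost[t][x]` (a hypothetical input) scores how visible the seam would be at position `x` in frame `t`, and that `jump_penalty` (also hypothetical) charges for moving the seam between consecutive frames.

```python
def smooth_seam(cost, jump_penalty=1.0):
    """Pick one seam position per frame.

    cost[t][x] is an assumed visibility score for placing the seam at
    candidate position x in frame t (lower is less visible).
    jump_penalty is an assumed charge per unit of seam movement
    between consecutive frames.
    """
    n_pos = len(cost[0])
    total = list(cost[0])   # best cumulative cost ending at each position
    back = []               # back[t-1][x]: best previous position for x

    for frame_cost in cost[1:]:
        new_total, pointers = [], []
        for x in range(n_pos):
            # Cheapest way to arrive at x, including the movement charge.
            prev = min(range(n_pos),
                       key=lambda p: total[p] + jump_penalty * abs(x - p))
            new_total.append(
                frame_cost[x] + total[prev] + jump_penalty * abs(x - prev)
            )
            pointers.append(prev)
        total = new_total
        back.append(pointers)

    # Trace the cheapest path backwards from the final frame.
    x = min(range(n_pos), key=lambda p: total[p])
    path = [x]
    for pointers in reversed(back):
        x = pointers[x]
        path.append(x)
    path.reverse()
    return path


# Toy scores for three frames and three candidate positions.
scores = [[0, 5, 5], [5, 5, 0], [0, 5, 5]]
independent = smooth_seam(scores, jump_penalty=0)   # best per frame, jumps around
steady = smooth_seam(scores, jump_penalty=10)       # stays put across frames
```

With no penalty, the seam follows the lowest score in each frame and hops between positions, which is the kind of frame-to-frame change that shows up as flicker. With a large penalty, the seam settles on the single position that is cheapest over the whole clip.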

Hybrid performances

In addition to performing face transplants, Dale says directors could also use his system to blend multiple versions of an actor's performance into a single scene. It can combine the mouth from one take with the eyes from another, for example, because it can match slight differences in movement between two videos. It cannot handle every situation, however, and videos with complex or very different lighting won't match up well.

"It's a step towards making this more automatic," says Paul Debevec, a computer graphics researcher at the University of Southern California in Los Angeles, whose work has been used in films such as The Matrix and Avatar. He says that Dale's technique is unlikely to be used by film industry professionals, who can already achieve the same effect, but it could be made into a YouTube plug-in or a similar easy-to-use tool. He warns that people may struggle to match lighting between videos made at home, though.